When it comes to game design, I tend to subscribe to the idea that the artistic text of a game is more than its code: it’s the experience that emerges between the game and the player. It’s an invisible sphere of energy that crackles back and forth like a Dragon Ball Z beam struggle, with things like the screen, the controller, and your fingers serving as the conduit.

(For more on that idea, check out “Videogames are a Mess” by Ian Bogost.)

And just like a beam struggle, I think the balance of that relationship shifts from moment to moment. Sometimes it tips more towards the software. Like, say, when you’re watching a cutscene. Other times it tips more towards the player. Like, say, when you’re thinking about your next move in a turn-based RPG.

It’s the latter one I want to talk about today, since I’ve never seen it discussed much before, and since I recently released a game that relies on it heavily. And since half the fun of writing about game design is getting to make up new words, I’m gonna call this “mindplay” because it’ll help with SEO and sounds like a fun kink.

Note: this post contains spoilers for Pumpkin.jpg. If you plan to play it, you should do so before reading this.

Defining Mindplay

For me, the essence of mindplay is a player staring at the screen with a furrowed brow, controller resting flat on the coffee table, while they sort through a problem within their own mind.

It’s the moment in a BioWare game when you’re presented with a nettlesome ethical choice. Or your turn in a chess game against the AI. Or any time you’re doing a puzzle during a game like The Witness.

I think to qualify as mindplay, the experience of a particular moment has to be happening entirely, or almost entirely, within the mind of the player. In essence, the game is on pause, and only resumes when the player is ready for it to do so — usually when they’ve either arrived at their answer, or want to solicit some more information from the game.

At the risk of sounding like a pretentious white guy hipster (especially after name-checking Ian Bogost and Jonathan Blow’s The Witness in the first few paragraphs), there’s a move in Tai Chi that I think is a useful analogy here. It’s called Grasp Sparrow’s Tail.

During Grasp Sparrow’s Tail, the practitioner receives incoming force, draws it inward toward themselves, swirls it around a bit, then sends it back out.

Likewise, during mindplay, you receive a problem, draw it into your own mind, ruminate a bit, then pick up the controller and send your answer back.

In the process, I think the balance of the artistic text tips from the software, to you, and back again — a tremendously weird and challenging sort of interaction to write and design for.

So how do we get our arms around this and use it to our advantage as writer/designers? I think it starts with accepting one simple premise:

Games are Inherently Subjective

I really want to stress this, because I think it’s key to understanding how a lot of games approach narrative. In my opinion, you cannot remove the player from the artistic text of a game. You can’t discuss a game without discussing the player’s experience of it. And if the player is an essential component, then the particularities of each person influence their experience, and therefore the text of the game.

I think this is a big part of why Let’s Plays are so popular: it’s the only way, short of playing a game yourself, to get that observer-observed subjective experience thing. (And if the person playing happens to be an entertaining internet personality whose tastes and idiosyncrasies you’re already familiar with, all the better.)

Every person is utterly unique.

Therefore, every person experiences every game—and every moment within a game—in a way that’s utterly unique to them.

My experience of Final Fantasy IX as someone who grew up playing it over and over is seismically different than that of a ten-year-old playing it for the first time today.

A gay black woman’s experience of a flirty line or choice in Dragon Age: Inquisition is going to be different than that of a straight white man.

These aren’t new ideas. People have been saying things like this about books for hundreds of years. It’s probably true for all media. I just think it’s extra true for games.

Mindplay in Pumpkin.jpg

In Pumpkin.jpg, I knew I wanted to rely on mindplay, even if I hadn’t fully articulated the concept to myself yet.

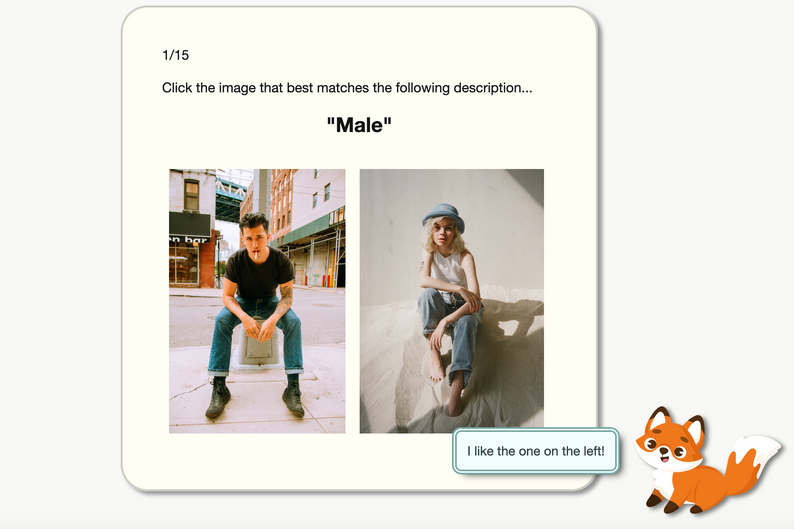

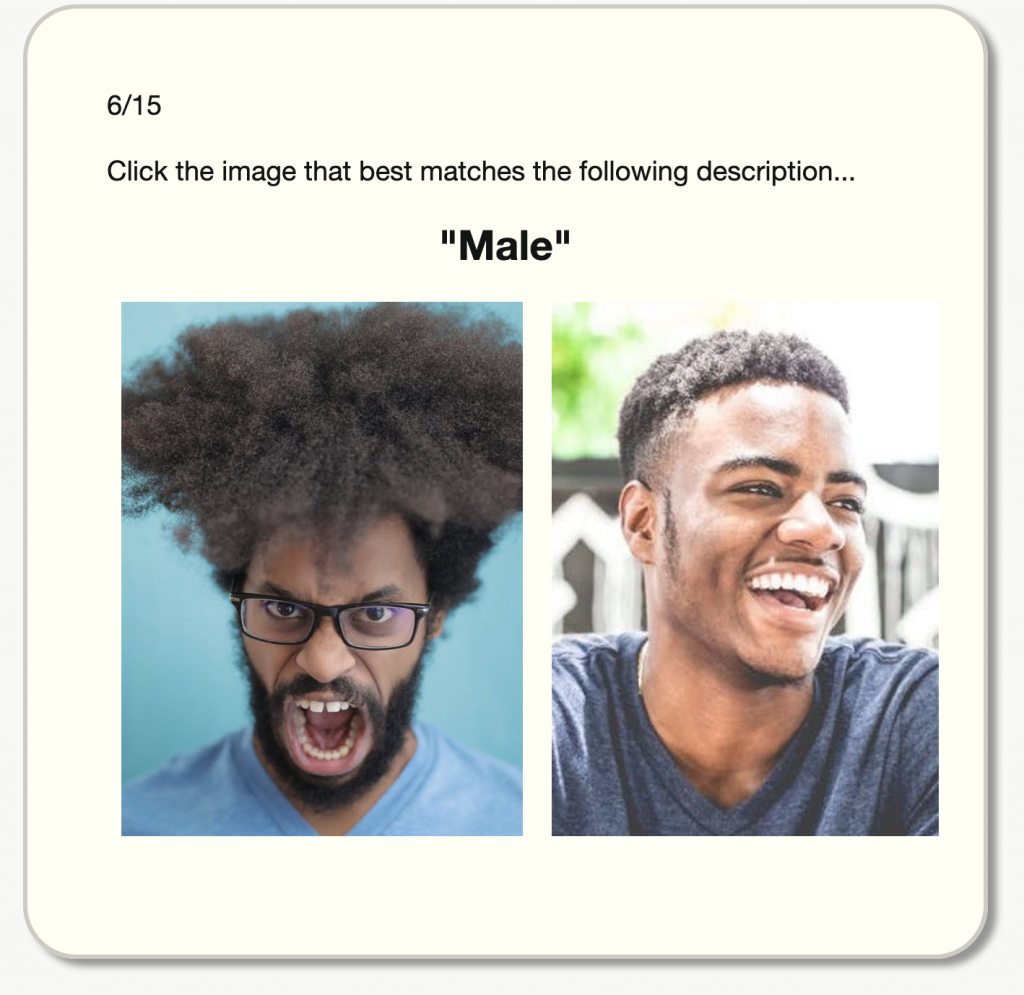

In the game, you’re given a simple task: sort through images of people’s faces, based on seemingly simple criteria, to help train a corporate facial recognition AI. Here’s a typical problem:

As the game progresses, it becomes increasingly obvious that the datasets and criteria you’re being given are arbitrary bullshit, and the ethical ramifications of trying to sort people into boxes based on anything so reductive become increasingly punch-you-in-the-face obvious.

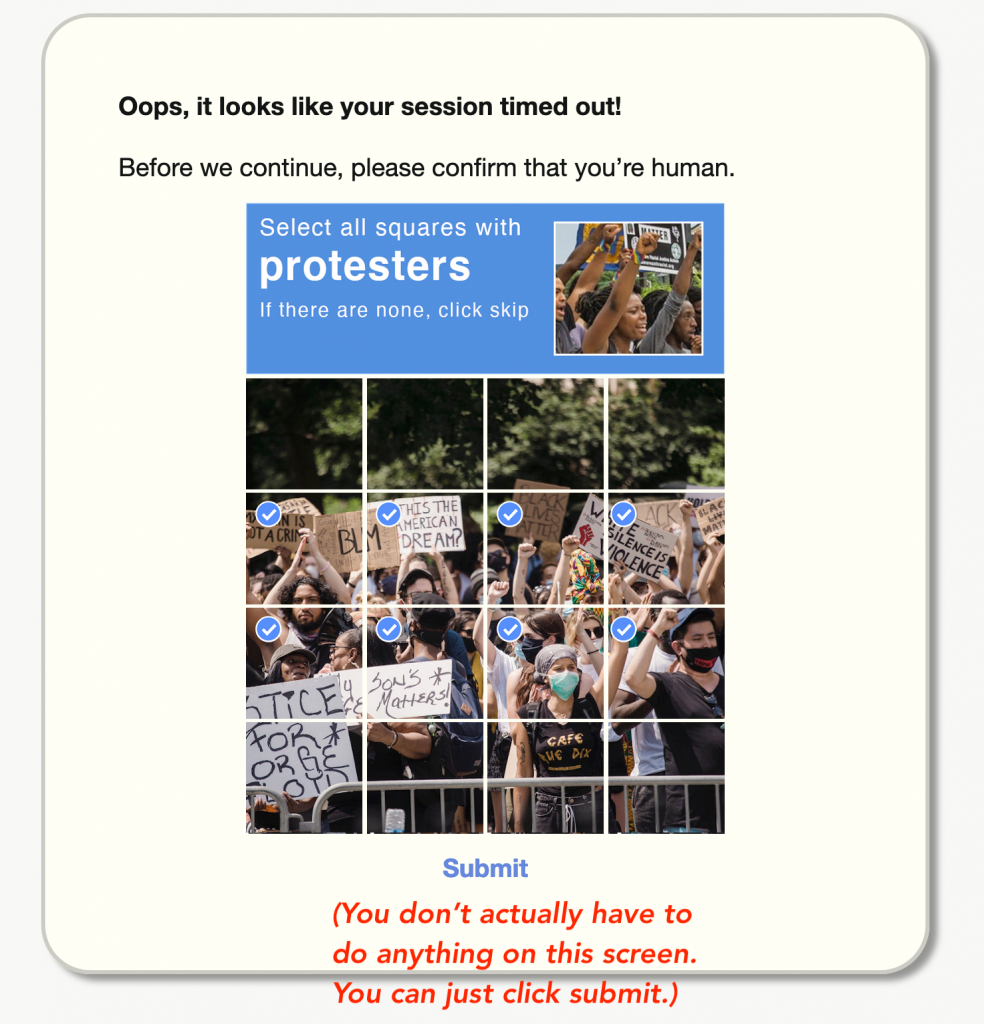

It’s important to note here that there’s basically zero feedback from the game itself in this regard. At a purely mechanical level, there is almost no interaction between game and player. Your answers aren’t timed. In fact, the game doesn’t even record them! During some sections, like the image captcha bits, you’re actually not even required to give an answer.

This was an intentional artistic choice on my part, both because I write branching narrative for a living and wanted to explore something different, and because I figured attaching any mechanics to answers in a game about how you can’t mechanically quantify people like this would be a little thematically discordant.

Pumpkin.jpg isn’t about what’s going on inside the game: it’s about what’s going on inside your head while you play it.

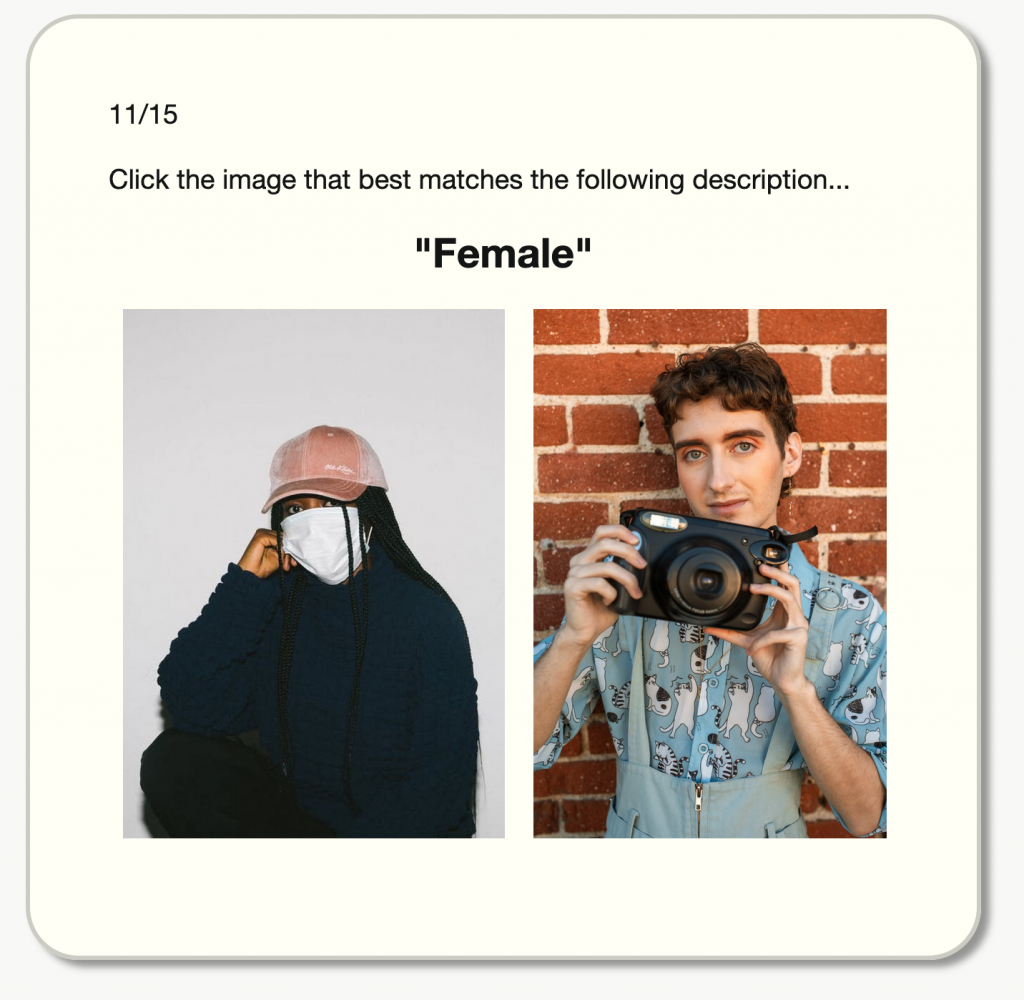

After you’ve played through the game at least once, hopefully it’s fairly obvious what I was going for. In a choice like this, I present the player with two options.

There is no right answer here. It’s a nonsensical question.

The “right” answer, of course (and the one I’m happy to see most players gravitate toward pretty quickly), is the invisible third option that arises during mindplay: recognizing the false dichotomy, then immediately rejecting it. The player takes the problem in, swishes it around in their head, realizes that both options feel inherently wrong, asks themselves why that is, and arrives at whatever idea I’m actually trying to convey with that choice.

(In this case, that idea is something like: “Society considers some emotions more masculine than others, which doesn’t actually make any sense, and that’s a social bias that can easily get reflected into facial recognition algorithms if they try to interpret someone’s gender by analyzing their expression. Also, it gets exponentially more fucked up if there’s racist bias involved.”)

Finally, because I don’t give them any way to expressly reject the question altogether, they opt for the next best thing:

Intentionally picking the “wrong” option in an attempt to sabotage the system, as a punk “fuck you” to Cranio, the game’s fictional facial recognition corporation. (Most prominently embodied by Faye, the distastefully chipper Clippy-like helper mascot who lives in the lower right-hand corner of the screen.)

This is where I want the player to be, mentally and emotionally, by the time they finish playing: feeling defensive toward facial recognition algorithms, suspicious of the motives behind them, conditioned to see past the cute corporate vector art and faux-inclusive ad copy, and ultimately radicalized into sabotaging systems like this by any means available, no matter how small or petty.

Hold on. The player is thinking…

So what makes for compelling mindplay?

The key ingredient, I think, is creating a moment of “puzzlement in frozen time” — treating the player’s mind like a computer you’re intentionally trying to overclock, while requiring as little realtime interaction as possible.

To achieve this, ideally I think you want to frontload the player with a bunch of categorically different kinds of information, then present them with a question or problem that invites them to smash those disparate bits together, creating a combinatorial explosion of new ideas and possibilities. Kinda like a particle collider. Then, once they’re lost in the soup of ideas, you ask them to deliver a single, simple conclusion, often using a very small set of inputs.

For narrative games, I think there needs to be a sense that none of the available options is immediately superior to the others: “there is no right answer here; the implications of these choices are different but equal.” I think it also helps if the choice feels freighted with consequence, and if there’s a sense that, once given, your answer can’t easily be revoked.

In the Mass Effect series, you’re frequently pitted against enemies called the Geth: bipedal robots who share a hive-mind but are seemingly non-sentient. Mostly they try to murder you on sight.

Deep into Mass Effect 2, however, you get a Geth party member, Legion, and learn that the Geth are indeed sentient. In fact, they’re divided; the hive-mind has fractured into two factions. One believes in peace. The other wants to exterminate all organic life. That’s the one you’ve been fighting all this time. Legion likens it to a thought virus — but, crucially, never goes so far as to imply that the Geth who have chosen it lack free will.

Some Geth just really, really fuckin hate humans.

Eventually, if you embark on Legion’s special loyalty mission, you’ll find yourself face to face with the control panel for the Geth hive-mind, where you’re confronted with a knotty ethical conundrum:

You can rewrite the consciousness of the bad Geth. Or you can erase them from the hive-mind.

It feels like an essay question on a really weird philosophy exam. When faced with robots who have free will, yet choose to be Nazis, is it ethical to forcibly reeducate them? Or is it more ethical to painlessly erase them? What if the reeducation isn’t foolproof; does that change your calculus at all? Why or why not?

Some may disagree, but to me, this is a great example of compelling game writing. The first time I played Mass Effect 2, I stared at this screen for a solid 15 minutes. I actually got up and walked away from the game, mulling it over while I made a cup of coffee.

Think about that. The software handed me the artistic text of the game experience, and then I literally picked it up and carried it out of the room with me.

That’s wild. It’s also very cool. In my opinion, more games should consider exploring experiences like that, because I think it’s a design space with a lot of untapped potential. The fact that one of the best examples I can think of comes from a AAA game rather than some obscure indie title is downright bizarre.

And for the record, I do think that choice has a specific thematic point it wants you to arrive at, regardless of which option you ultimately pick: the idea that genocide isn’t always a goal unto itself, but often presents itself as the “solution” to a “problem” — and you might be rattled to discover that you’re not as immune to its proponents’ persuasive arguments as you might think, even if only insofar as they’re capable of making you hesitate for a moment in a videogame.

(Which is somewhat undercut by the fact that the game has an achievement for headshotting 30 enemies, and ME3 has one for killing five thousand. But hey, that’s videogames, baybeeeeee~)

In any case, by the time you make your decision, you’ve crystallized a clearer, more articulated understanding of your own internal ethics. And if you want to see this demonstrated, get two Mass Effect fans together in a room, ask them which choice they picked when it came to the Geth, then watch them explode into a full-on Oxford-style debate, complete with supporting arguments and prepared refutations.

Phew!

Now then: for less narrative, more game-y games whose inputs go beyond a pair of dialogue options, like the maze puzzles in The Witness, I think the method for achieving good mindplay might actually be the exact opposite.

Instead of striving for indecision arising from weighty, irrevocable choices, I think you want to strive for indecision by virtue of having too much freedom. Sort of like analysis paralysis, but the mindful version, intentionally induced for a specific artistic intent. Here, you want the player to feel like: this is the problem, there’s only one particular input or sequence of inputs that’s correct, it’s my task to find it, and I have all the tools I need to do so, on the screen, right now.

I think it also helps, in games like this, to rely on content bottlenecks, and if possible, give the player lots of possible inputs. Almost too many. The player might think: “There’s an infinite number of things I could do here, but only one right thing, and I’m not getting through this door until I figure it out. So I just need to sit here and stare at it for a while.”

Wrapping Up

I think mindplay can feel scary for a lot of game designers. By certain metrics, if the player stops interacting with your game for thirty seconds, that’s a red flag: uh oh, hey, that’s not good; let’s throw a quick-time event at them or something.

But I think if you’re confident in your message and design—and if you trust your player’s intelligence—mindplay can be powerfully engaging and a really useful design tool, particularly in games striving to persuade the player toward a particular thematic viewpoint. In my personal opinion, there’s nothing that makes a player internalize and take ownership of a concept more than the feeling of having arrived at it organically, in the course of their own meditation.

Food for thought.